Canonised data

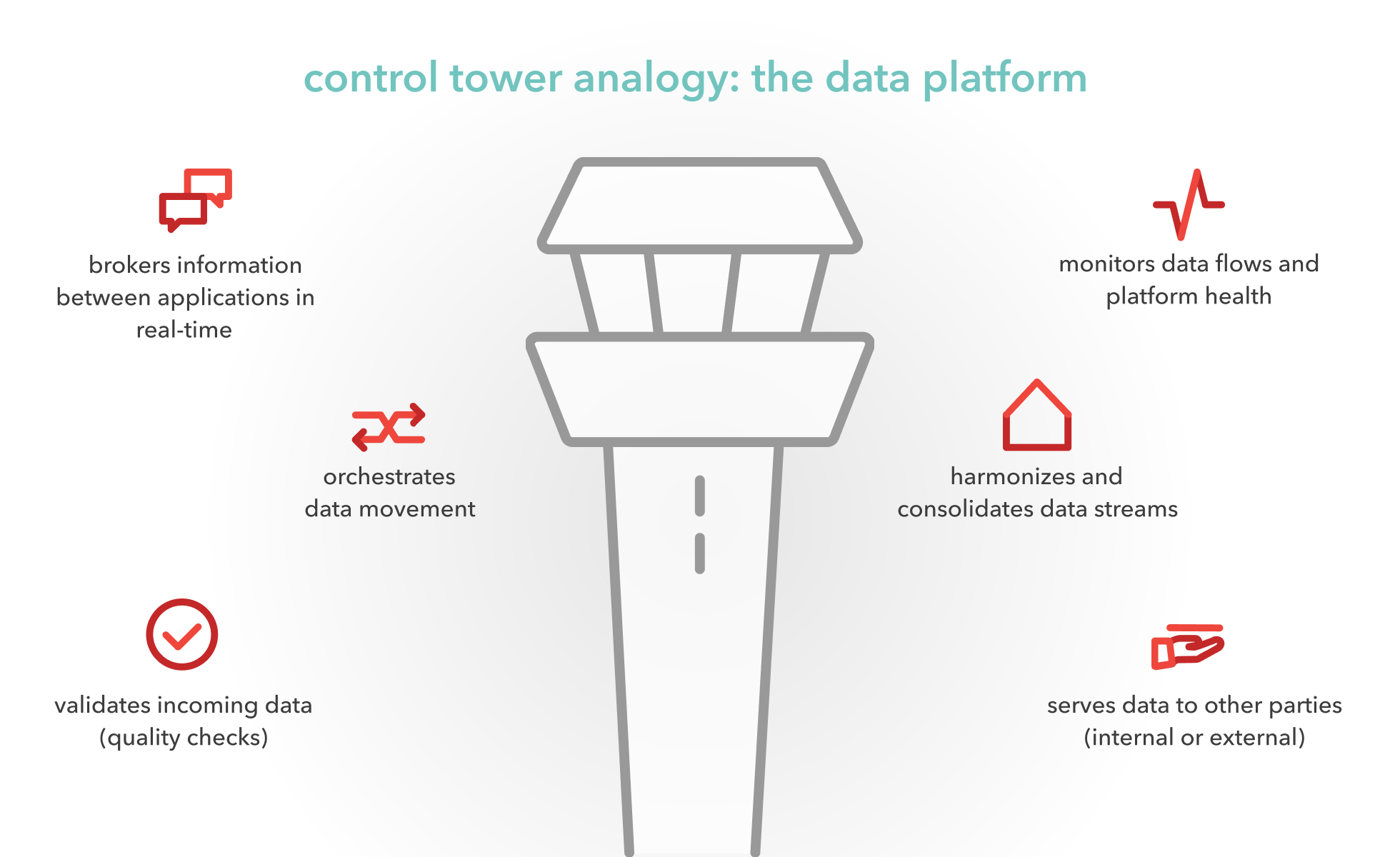

Extracting so-called ‘curated data’ would require only a few lines of code. “The ‘canonical data model’ focuses heavily on reusability,” Sebastiaan continues. “In this way, we ensure that each transformation needs to be defined only once, and that the resulting curated data can be consumed as re-usable data products. This allows data engineers, scientists, analysts and managers to focus on added value, instead of overhead tasks like data orchestration, exportation, and creating lineages.”

In essence, there are three ‘stages’ of data:

Stage 1 – Raw:

This is data in its native format, exactly as received from its source. It’s unfiltered and unrefined, captured before any transformation. It should be immutable and provided in a read-only format.

Stage 2 – Prepare:

Data in this stage is validated, standardised and harmonised, and has a high level of reliability. It consists of re-usable building blocks for logical data models.

Stage 3 – Serve:

This data is ready to be consumed by other systems: it’s optimised for reading and customised for specific use cases.
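
To make the three stages concrete, here is a minimal Python sketch. It is illustrative only and not the implementation described in the article: the order records, the `Order` class and the functions `prepare` and `serve_revenue_by_country` are all hypothetical names invented for this example. It shows raw records held read-only, a single shared transformation producing curated data, and a serving view built for one use case.

```python
from dataclasses import dataclass
from types import MappingProxyType

# Stage 1 - Raw: records exactly as received, wrapped in read-only views.
# (Hypothetical sample data, invented for this sketch.)
RAW_ORDERS = tuple(
    MappingProxyType(record)
    for record in (
        {"id": "1", "country": "nl", "amount": "10.50"},
        {"id": "2", "country": "NL ", "amount": "4.50"},
        {"id": "3", "country": "be", "amount": "n/a"},  # will fail validation
    )
)


@dataclass(frozen=True)
class Order:
    """A validated, standardised record: a reusable building block."""
    order_id: int
    country: str
    amount: float


def prepare(raw_records):
    """Stage 2 - Prepare: validate and standardise raw records.

    The transformation is defined once here and reused by every consumer.
    """
    prepared = []
    for record in raw_records:
        try:
            prepared.append(
                Order(
                    order_id=int(record["id"]),
                    country=record["country"].strip().upper(),
                    amount=float(record["amount"]),
                )
            )
        except (KeyError, ValueError):
            # Skip records that fail validation; the raw copy stays untouched.
            continue
    return prepared


def serve_revenue_by_country(orders):
    """Stage 3 - Serve: a read-optimised view for one specific use case."""
    totals = {}
    for order in orders:
        totals[order.country] = totals.get(order.country, 0.0) + order.amount
    return totals


curated = prepare(RAW_ORDERS)
revenue = serve_revenue_by_country(curated)
print(revenue)
```

Any other consumer, such as a dashboard or a model feature pipeline, could start from the same `curated` list instead of re-implementing the cleaning logic, which is the reusability point made above.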